Probability and complex function: Unit II: Two dimensional random variables

Covariance, correlation and regression

Two dimensional random variables


When two or more random variables are defined on a probability space, it is useful to describe how they vary together, that is, to measure the relationship between the variables. A common measure of the relationship between two random variables is the covariance. To define the covariance, we need the expected value of a function h(X, Y) of two random variables. The definition simply extends the one used for a function of a single random variable. For discrete X and Y with joint probability mass function p(x, y),

$$E[h(X, Y)] = \sum_x \sum_y h(x, y)\, p(x, y),$$

and for continuous X and Y with joint density f(x, y), the double sum is replaced by $\iint h(x, y)\, f(x, y)\, dx\, dy$.
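As a minimal sketch of the discrete case, the snippet below evaluates E[h(X, Y)] by summing h(x, y) p(x, y) over a small joint pmf. The pmf values and the helper name `expect` are hypothetical, chosen only for illustration. `Fraction` keeps the arithmetic exact so results can be compared with hand calculations.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) of a discrete pair (X, Y), chosen for illustration.
joint = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expect(h, pmf):
    """E[h(X, Y)]: sum of h(x, y) * p(x, y) over all support points (x, y)."""
    return sum(h(x, y) * p for (x, y), p in pmf.items())

assert sum(joint.values()) == 1  # a valid pmf sums to 1

print(expect(lambda x, y: x * y, joint))  # E(XY)    -> 3/8
print(expect(lambda x, y: x + y, joint))  # E(X + Y) -> 9/8
```

Any function h can be passed as a lambda. The same helper covers E(X), E(Y), E(XY) and so on.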

1. COVARIANCE

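If X and Y are random variables, then the covariance between them is defined as

$$\operatorname{Cov}(X, Y) = E[(X - E(X))(Y - E(Y))]$$

Expanding the product and using linearity of expectation,

$$
\begin{aligned}
\operatorname{Cov}(X, Y) &= E[XY - X\,E(Y) - Y\,E(X) + E(X)\,E(Y)] \\
&= E(XY) - E(X)\,E(Y) - E(X)\,E(Y) + E(X)\,E(Y) \\
&= E(XY) - E(X)\,E(Y)
\end{aligned}
$$

Hence Cov(X, Y) = E(XY) - E(X) E(Y).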
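To check numerically that the defining formula and the shortcut E(XY) - E(X)E(Y) agree, here is a small sketch on a hypothetical joint pmf. The pmf and the helper `expect` are illustrative assumptions, not from the notes.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) used only for illustration.
joint = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expect(h, pmf):
    """E[h(X, Y)] for a discrete joint pmf given as {(x, y): probability}."""
    return sum(h(x, y) * p for (x, y), p in pmf.items())

ex = expect(lambda x, y: x, joint)  # E(X) = 1/2
ey = expect(lambda x, y: y, joint)  # E(Y) = 5/8

# Definition: Cov(X, Y) = E[(X - E(X))(Y - E(Y))]
cov_def = expect(lambda x, y: (x - ex) * (y - ey), joint)
# Shortcut: Cov(X, Y) = E(XY) - E(X)E(Y)
cov_short = expect(lambda x, y: x * y, joint) - ex * ey

print(cov_def, cov_short)  # 1/16 1/16
```

The shortcut is usually easier by hand, because E(XY) is a single weighted sum while the definition needs the means first.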

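Note: If X and Y are independent, then Cov(X, Y) = 0.

Indeed, if X and Y are independent, then E(XY) = E(X) E(Y), so Cov(X, Y) = E(XY) - E(X) E(Y) = 0. The converse does not hold in general: zero covariance does not imply independence.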
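The sketch below checks both directions under stated assumptions. The first pair is built as a product of hypothetical marginals, so X and Y are independent by construction. The second pair takes X uniform on {-1, 0, 1} and Y = X², a standard example where Y depends on X yet the covariance is zero. The helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def expect(h, pmf):
    """E[h(X, Y)] for a discrete joint pmf {(x, y): probability}."""
    return sum(h(x, y) * p for (x, y), p in pmf.items())

def cov(pmf):
    """Cov(X, Y) = E(XY) - E(X)E(Y)."""
    ex = expect(lambda x, y: x, pmf)
    ey = expect(lambda x, y: y, pmf)
    return expect(lambda x, y: x * y, pmf) - ex * ey

# Independent pair: p(x, y) = p_X(x) * p_Y(y) with hypothetical marginals.
px = {0: Fraction(1, 3), 1: Fraction(2, 3)}
py = {-1: Fraction(1, 2), 2: Fraction(1, 2)}
independent = {(x, y): px[x] * py[y] for x, y in product(px, py)}

# Dependent pair: X uniform on {-1, 0, 1} and Y = X**2.
dependent = {(x, x * x): Fraction(1, 3) for x in (-1, 0, 1)}

print(cov(independent))  # 0
print(cov(dependent))    # 0, although Y is a function of X

# Dependence check: P(X=0, Y=0) differs from P(X=0) * P(Y=0).
p_x0 = sum(p for (x, _), p in dependent.items() if x == 0)
p_y0 = sum(p for (_, y), p in dependent.items() if y == 0)
print(dependent[(0, 0)], p_x0 * p_y0)  # 1/3 1/9
```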

(i) Cov(aX, bY) = ab Cov(X, Y)

$$
\begin{aligned}
\operatorname{Cov}(aX, bY) &= E[(aX)(bY)] - E(aX)\,E(bY) \\
&= ab\,E(XY) - ab\,E(X)\,E(Y) \\
&= ab\,[E(XY) - E(X)\,E(Y)] = ab\,\operatorname{Cov}(X, Y)
\end{aligned}
$$

(ii) Cov(X + a, Y + b) = Cov(X, Y)

$$
\begin{aligned}
\operatorname{Cov}(X + a, Y + b) &= E[(X + a)(Y + b)] - E(X + a)\,E(Y + b) \\
&= E[XY + bX + aY + ab] - [E(X) + a]\,[E(Y) + b] \\
&= E(XY) + b\,E(X) + a\,E(Y) + ab - E(X)\,E(Y) - a\,E(Y) - b\,E(X) - ab \\
&= E(XY) - E(X)\,E(Y) = \operatorname{Cov}(X, Y)
\end{aligned}
$$

(iii) Cov(aX + b, cY + d) = ac Cov(X, Y)

$$
\begin{aligned}
\operatorname{Cov}(aX + b, cY + d) &= E[(aX + b)(cY + d)] - E(aX + b)\,E(cY + d) \\
&= E[ac\,XY + ad\,X + bc\,Y + bd] - [a\,E(X) + b]\,[c\,E(Y) + d] \\
&= ac\,E(XY) + ad\,E(X) + bc\,E(Y) + bd - ac\,E(X)\,E(Y) - ad\,E(X) - bc\,E(Y) - bd \\
&= ac\,[E(XY) - E(X)\,E(Y)] = ac\,\operatorname{Cov}(X, Y)
\end{aligned}
$$
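Properties (i) to (iii) can be spot-checked with exact arithmetic. The sketch below uses a hypothetical joint pmf, arbitrary constants a, b, c, d and a hypothetical helper `cov_of`. It compares Cov(X, Y) with the covariances of scaled, shifted and affine-transformed variables.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y), for illustration only.
joint = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expect(h, pmf):
    """E[h(X, Y)] for a discrete joint pmf {(x, y): probability}."""
    return sum(h(x, y) * p for (x, y), p in pmf.items())

def cov_of(f, g, pmf):
    """Cov(U, V) for U = f(X, Y), V = g(X, Y), via E(UV) - E(U)E(V)."""
    eu = expect(f, pmf)
    ev = expect(g, pmf)
    return expect(lambda x, y: f(x, y) * g(x, y), pmf) - eu * ev

a, b, c, d = 3, -2, Fraction(1, 2), 7  # arbitrary constants for the check

base = cov_of(lambda x, y: x, lambda x, y: y, joint)                    # Cov(X, Y)
shifted = cov_of(lambda x, y: x + b, lambda x, y: y + d, joint)         # shifts only, as in (ii)
scaled = cov_of(lambda x, y: a * x, lambda x, y: c * y, joint)          # scaling only, as in (i)
affine = cov_of(lambda x, y: a * x + b, lambda x, y: c * y + d, joint)  # property (iii)

print(base, shifted, scaled, affine)  # 1/16 1/16 3/32 3/32
```

Shifting leaves the covariance unchanged. Scaling multiplies it by the product of the scale factors, here 3 × 1/2.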

(iv) V(X₁ + X₂) = V(X₁) + V(X₂) + 2 Cov(X₁, X₂)

$$
\begin{aligned}
V(X_1 + X_2) &= E[(X_1 + X_2)^2] - [E(X_1 + X_2)]^2 \\
&= E(X_1^2 + 2X_1X_2 + X_2^2) - [E(X_1) + E(X_2)]^2 \\
&= E(X_1^2) + 2E(X_1X_2) + E(X_2^2) - [E(X_1)]^2 - [E(X_2)]^2 - 2E(X_1)\,E(X_2) \\
&= \left(E(X_1^2) - [E(X_1)]^2\right) + \left(E(X_2^2) - [E(X_2)]^2\right) + 2\left[E(X_1X_2) - E(X_1)\,E(X_2)\right] \\
&= V(X_1) + V(X_2) + 2\operatorname{Cov}(X_1, X_2)
\end{aligned}
$$
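As a sanity check on (iv), the sketch below computes V(X₁ + X₂) directly from a hypothetical joint pmf of two dependent variables. It then compares the result with V(X₁) + V(X₂) + 2 Cov(X₁, X₂). The pmf and helper names are assumptions made for the example.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x1, x2) of two dependent discrete variables.
joint = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expect(h, pmf):
    """E[h(X1, X2)] for a discrete joint pmf {(x1, x2): probability}."""
    return sum(h(x1, x2) * p for (x1, x2), p in pmf.items())

def var_of(f, pmf):
    """V(f(X1, X2)) = E[(f - E(f))^2]."""
    m = expect(f, pmf)
    return expect(lambda x1, x2: (f(x1, x2) - m) ** 2, pmf)

v1 = var_of(lambda x1, x2: x1, joint)
v2 = var_of(lambda x1, x2: x2, joint)
m1 = expect(lambda x1, x2: x1, joint)
m2 = expect(lambda x1, x2: x2, joint)
cov12 = expect(lambda x1, x2: x1 * x2, joint) - m1 * m2

lhs = var_of(lambda x1, x2: x1 + x2, joint)  # V(X1 + X2) computed directly
rhs = v1 + v2 + 2 * cov12                    # V(X1) + V(X2) + 2 Cov(X1, X2)
print(lhs, rhs)  # 39/64 39/64
```

Because Cov(X₁, X₂) = 1/16 is non-zero here, dropping the covariance term would give the wrong variance.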

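(v) V(X₁ − X₂) = V(X₁) + V(X₂) − 2 Cov(X₁, X₂)

This follows from (iv) with X₂ replaced by −X₂, since V(−X₂) = V(X₂) and, by (i), Cov(X₁, −X₂) = −Cov(X₁, X₂).

If X₁ and X₂ are independent, then Cov(X₁, X₂) = 0, so

$$V(X_1 \pm X_2) = V(X_1) + V(X_2)$$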
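For independent variables, the variances add for both the sum and the difference. The sketch below illustrates this with a joint pmf built as a product of hypothetical marginals. It also shows why "V(X₁) − V(X₂)" cannot be the variance of a difference: here that quantity is negative.

```python
from fractions import Fraction
from itertools import product

# Independent pair: joint pmf is the product of hypothetical marginals.
px = {0: Fraction(1, 3), 1: Fraction(2, 3)}
py = {-1: Fraction(1, 2), 2: Fraction(1, 2)}
joint = {(x1, x2): px[x1] * py[x2] for x1, x2 in product(px, py)}

def expect(h, pmf):
    """E[h(X1, X2)] for a discrete joint pmf {(x1, x2): probability}."""
    return sum(h(x1, x2) * p for (x1, x2), p in pmf.items())

def var_of(f, pmf):
    """V(f(X1, X2)) = E[(f - E(f))^2]."""
    m = expect(f, pmf)
    return expect(lambda x1, x2: (f(x1, x2) - m) ** 2, pmf)

v1 = var_of(lambda x1, x2: x1, joint)                # 2/9
v2 = var_of(lambda x1, x2: x2, joint)                # 9/4
v_sum = var_of(lambda x1, x2: x1 + x2, joint)
v_diff = var_of(lambda x1, x2: x1 - x2, joint)

print(v_sum, v_diff, v1 + v2)  # 89/36 89/36 89/36
print(v1 - v2)                 # -73/36, negative, so it cannot be a variance
```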

2. CORRELATION ANALYSIS

A distribution involving two variables is known as a bivariate distribution. If the two variables vary such that a change in one variable affects the other, the variables are said to be correlated. For example, there exists some relationship between the height and weight of a person, the price of a commodity and its demand, rainfall and the production of rice, etc. The degree of relationship between the variables under consideration is measured through correlation analysis. The measure of correlation is called the correlation coefficient or correlation index. Thus, correlation analysis refers to the techniques used to measure the closeness of the relationship between the variables.

Types of correlation:

There are three important ways of classifying correlation, viz.,

1. Positive and negative
2. Simple, partial and multiple
3. Linear and non-linear

Positive and negative correlation:

If the two variables deviate in the same direction, i.e., if an increase in one variable results in a corresponding increase in the other, or a decrease in one results in a corresponding decrease in the other, the correlation is said to be direct or positive. For example, the correlation between the height and weight of a person and the correlation between rainfall and the production of rice are positive.

If the two variables constantly deviate in opposite directions, i.e., if an increase in one variable results in a corresponding decrease in the other, or a decrease in one results in a corresponding increase in the other, the correlation is said to be inverse or negative. The correlation between the price of a commodity and its demand, and the correlation between the volume and pressure of a perfect gas, are negative.

Correlation is said to be perfect if a deviation in one variable is followed by a corresponding and proportional deviation in the other.
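Perfect correlation can be made concrete with Karl Pearson's coefficient r = Cov(X, Y)/(σ_X σ_Y). The sketch below applies it to made-up data in which Y changes in exact proportion to X. It is a minimal illustration, and `pearson_r` is a hypothetical helper name. The result is r = +1 when Y moves in the same direction as X and r = −1 when it moves in the opposite direction.

```python
import math

def pearson_r(xs, ys):
    """Karl Pearson's r for paired data; the 1/n factors cancel in the ratio."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

xs = [1, 2, 3, 4, 5]
up = [3 * x + 2 for x in xs]     # proportional change in the same direction
down = [-2 * x + 5 for x in xs]  # proportional change in the opposite direction

print(round(pearson_r(xs, up), 6))    # 1.0
print(round(pearson_r(xs, down), 6))  # -1.0
```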

Simple, partial and multiple correlation:

If only two variables are considered for correlation analysis, it is called simple correlation. When three or more variables are studied, it is a problem of either multiple or partial correlation.

In multiple correlation, three or more variables are studied simultaneously. For example, the study of the relationship between the yield of rice per hectare and both the amount of rainfall and the usage of fertilizers is a multiple correlation.

When three or more variables are involved in correlation analysis, the correlation between the dependent variable and only one particular independent variable is called partial correlation; the influence of the other independent variables is excluded.

For example, the yield of rice is related to both the application of fertilizers and the rainfall. In this case, the relation of yield to fertilizer excluding the effect of rainfall, and the relation of yield to rainfall excluding the effect of fertilizer, are partial correlations.

Linear and non-linear correlation:

If the amount of change in one variable tends to bear a constant ratio to the amount of change in the other variable, the correlation is said to be linear. In this case, the graph of Y against X is a straight line.

A correlation is said to be non-linear or curvilinear if the amount of change in one variable does not bear a constant ratio to the amount of change in the other variable. For example, if rainfall is doubled, the production of rice would not necessarily be doubled.
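The "constant ratio of changes" criterion can be checked directly. The sketch below uses made-up data. It computes the ratio of successive changes for a linear relation and for a curved one (Y = X²). It also computes Karl Pearson's r for both, which measures the strength of a linear relationship, so the exact but curved relation gives r slightly below 1. The helper names are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Karl Pearson's r for paired data; the 1/n factors cancel in the ratio."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def change_ratios(xs, ys):
    """Ratio of successive changes (y[i+1] - y[i]) / (x[i+1] - x[i])."""
    return [(ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i]) for i in range(len(xs) - 1)]

xs = [1, 2, 3, 4, 5]
linear = [2 * x + 1 for x in xs]  # constant ratio of changes
curved = [x ** 2 for x in xs]     # ratio of changes grows with x

print(change_ratios(xs, linear))        # [2.0, 2.0, 2.0, 2.0]
print(change_ratios(xs, curved))        # [3.0, 5.0, 7.0, 9.0]
print(pearson_r(xs, linear))            # 1.0
print(round(pearson_r(xs, curved), 3))  # 0.981
```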

Methods of studying correlation:

The following are some of the methods used for studying correlation.

(i) Scatter diagram method
(ii) Graphic method
(iii) Karl Pearson's coefficient of correlation
(iv) Rank method
(v) Concurrent deviation method
(vi) Method of least squares

Karl Pearson's coefficient of correlation

Let X and Y be given random variables. Karl Pearson's coefficient of correlation is denoted by $r_{XY}$ or r(X, Y) and is defined as

$$r(X, Y) = \frac{\operatorname{Cov}(X, Y)}{\sigma_X\,\sigma_Y},$$

where $\sigma_X$ and $\sigma_Y$ are the standard deviations of X and Y.

Note that the correlation coefficient always lies between −1 and +1.

Note: Two random variables with non-zero correlation are said to be correlated.

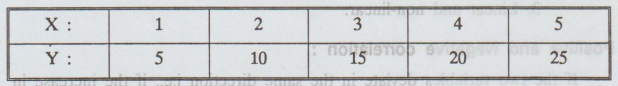

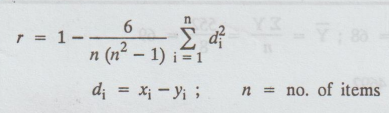
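Using the standard definition r(X, Y) = Cov(X, Y)/(σ_X σ_Y), the sketch below computes the coefficient for a hypothetical discrete joint pmf. For this pmf, Cov(X, Y) = 1/16, V(X) = 1/4 and V(Y) = 15/64, so r = 1/√15. The pmf and the helper name `pearson_r_pmf` are illustrative assumptions.

```python
import math
from fractions import Fraction

# Hypothetical joint pmf p(x, y), for illustration only.
joint = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expect(h, pmf):
    """E[h(X, Y)] for a discrete joint pmf {(x, y): probability}."""
    return sum(h(x, y) * p for (x, y), p in pmf.items())

def pearson_r_pmf(pmf):
    """r(X, Y) = Cov(X, Y) / (sigma_X * sigma_Y) for a discrete joint pmf."""
    ex = expect(lambda x, y: x, pmf)
    ey = expect(lambda x, y: y, pmf)
    cov = expect(lambda x, y: (x - ex) * (y - ey), pmf)
    var_x = expect(lambda x, y: (x - ex) ** 2, pmf)
    var_y = expect(lambda x, y: (y - ey) ** 2, pmf)
    return float(cov) / math.sqrt(var_x * var_y)

r = pearson_r_pmf(joint)
print(round(r, 4))  # 0.2582, i.e. 1/sqrt(15)
assert -1 <= r <= 1  # the coefficient always lies in [-1, 1]
```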

3. RANK CORRELATION

Let us suppose that a group of n individuals is arranged in order of merit or proficiency in the possession of two characteristics A and B. These ranks in the two characteristics will, in general, be different. For example, if we consider the relation between intelligence and beauty, it is not necessary that a beautiful individual is also intelligent.

If $(X_i, Y_i)$, i = 1, 2, ..., n, are the ranks of the individuals in the two characteristics A and B respectively, then the rank correlation coefficient is given by

$$\rho = 1 - \frac{6\sum d_i^2}{n(n^2 - 1)},$$

where $d_i = X_i - Y_i$ is the difference between the ranks. This formula is called Spearman's formula for the rank correlation coefficient.
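A minimal sketch of Spearman's formula ρ = 1 − 6Σd²/(n(n² − 1)) for untied ranks follows. The ranks are hypothetical, and `spearman_rho` is an illustrative helper name. Identical rankings give ρ = 1 and completely reversed rankings give ρ = −1.

```python
from fractions import Fraction

def spearman_rho(rank_x, rank_y):
    """Spearman's rank correlation for untied ranks: 1 - 6*sum(d_i^2) / (n(n^2 - 1))."""
    n = len(rank_x)
    sum_d2 = sum((rx - ry) ** 2 for rx, ry in zip(rank_x, rank_y))
    return 1 - Fraction(6 * sum_d2, n * (n * n - 1))

# Hypothetical ranks of 5 individuals in characteristics A and B.
ranks_a = [1, 2, 3, 4, 5]
ranks_b = [2, 1, 4, 3, 5]

print(spearman_rho(ranks_a, ranks_b))        # 4/5
print(spearman_rho(ranks_a, ranks_a))        # 1 (identical rankings)
print(spearman_rho(ranks_a, ranks_a[::-1]))  # -1 (reversed rankings)
```

For ranks without ties, this value coincides with Karl Pearson's coefficient computed on the ranks themselves.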

4. REPEATED RANKS

If any two or more individuals are equal in either classification with respect to characteristic A or B, i.e., if there is more than one item with the same value in a series, then Spearman's formula for the rank correlation coefficient breaks down. In this case, common ranks are given to the repeated items. This common rank is the average of the ranks that these items would have received if they were slightly different from each other, and the next item gets the rank following those already assigned. As a result, the following adjustment or correction is made in the correlation formula.

In the correlation formula, we add the factor m(m² − 1)/12 to ∑d², where m is the number of times an item is repeated. This correction factor is added for each repeated value, so that

$$\rho = 1 - \frac{6\left[\sum d^2 + \sum \frac{m(m^2 - 1)}{12}\right]}{n(n^2 - 1)}$$
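The tie procedure can be sketched as follows, under these assumptions: rank 1 goes to the largest value (order of merit), tied values share the average of the ranks they would occupy, and m(m² − 1)/12 is added to Σd² for every repeated value in either series. The scores and all helper names are hypothetical.

```python
from collections import Counter
from fractions import Fraction

def average_ranks(values):
    """Rank 1 = largest value; tied values share the mean of the ranks they occupy."""
    order = sorted(values, reverse=True)
    rank_of = {}
    i = 0
    while i < len(order):
        j = i
        while j < len(order) and order[j] == order[i]:
            j += 1
        # Sorted positions i .. j-1 would hold ranks i+1 .. j; use their average.
        rank_of[order[i]] = Fraction(i + 1 + j, 2)
        i = j
    return [rank_of[v] for v in values]

def tie_correction(values):
    """Sum of m(m^2 - 1)/12 over each value that occurs m > 1 times."""
    return sum(Fraction(m * (m * m - 1), 12) for m in Counter(values).values() if m > 1)

def spearman_with_ties(xs, ys):
    """1 - 6[sum d^2 + corrections] / (n(n^2 - 1)), using average ranks for ties."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(xs)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    corrected = d2 + tie_correction(xs) + tie_correction(ys)
    return 1 - 6 * corrected / (n * (n * n - 1))

# Hypothetical scores of 5 individuals; 20 repeats in xs and 35 repeats in ys.
xs = [10, 20, 20, 30, 40]
ys = [15, 25, 35, 35, 45]
print([str(r) for r in average_ranks(xs)])  # ['5', '7/2', '7/2', '2', '1']
print(spearman_with_ties(xs, ys))           # 7/8
```

Here Σd² = 3/2, and each series contributes one correction of 2(2² − 1)/12 = 1/2. That gives ρ = 1 − 6(5/2)/120 = 7/8.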


MA3303 3rd Semester EEE Dept | 2021 Regulation